
CityBert

CityBert is a machine learning project that fine-tunes a neural network model so that the similarity between its vector representations of two cities reflects their geodesic distance. The project generates a dataset of US cities and their pairwise geodesic distances, which is then used to train the model. Note that the model only considers geographic distance and does not take into account other factors such as political borders or transportation infrastructure.
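This README does not show how generate_data.py computes geodesic distances or how a distance becomes a training target. As a dependency-free illustration, the sketch below uses the haversine formula, which gives the great-circle distance on a spherical Earth rather than a true ellipsoidal geodesic (the two typically agree to within roughly 0.5%). The `distance_to_similarity` mapping and its 20,000 km scale are hypothetical, chosen only to show one way a distance could be turned into a similarity score in [0, 1].

```python
import math

# Mean Earth radius in km; treating the Earth as a sphere is an approximation.
EARTH_RADIUS_KM = 6371.0088


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Hypothetical scale: roughly half the Earth's circumference, the largest great-circle distance.
MAX_DISTANCE_KM = 20_000.0


def distance_to_similarity(distance_km: float, max_km: float = MAX_DISTANCE_KM) -> float:
    """Hypothetical linear mapping: 0 km -> 1.0, max_km or more -> 0.0."""
    return max(0.0, 1.0 - distance_km / max_km)


# New York City to Los Angeles is roughly 3,900-4,000 km.
nyc_la_km = haversine_km(40.7128, -74.0060, 34.0522, -118.2437)
```

A linear mapping is only the simplest choice; a decaying function of distance would weight nearby cities more heavily.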

The project can be extended to include other distance metrics or additional data, such as airport codes, city aliases, or time zones.

Overview of Project Files

- generate_data.py: Generates a dataset of US cities and their pairwise geodesic distances.

- train.py: Trains the neural network model using the generated dataset.

- eval.py: Evaluates the trained model by comparing the similarity between city vectors before and after training.

- Makefile: Automates the execution of various tasks, such as generating data, training, and evaluation.

- README.md: Provides a description of the project, instructions on how to use it, and expected results.

- requirements.txt: Defines the requirements used for creating the results.
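The dataset step amounts to visiting every unordered pair of cities once. A minimal sketch, assuming each city is a hypothetical `(name, latitude, longitude)` tuple and that the output CSV uses the hypothetical columns `city_a`, `city_b`, `distance_km` (the actual layout of city_distances.csv is not documented here). The distance function is passed in, so it could be an ellipsoidal geodesic such as `geopy.distance.geodesic` wrapped in a four-argument function, or a spherical approximation.

```python
import csv
import io
import itertools
from typing import Callable, Iterable, TextIO, Tuple

# Hypothetical record shape: (name, latitude, longitude) in decimal degrees.
City = Tuple[str, float, float]


def write_city_pairs(
    cities: Iterable[City],
    distance_fn: Callable[[float, float, float, float], float],
    out: TextIO,
) -> int:
    """Write one CSV row per unordered city pair and return the number of pairs written."""
    writer = csv.writer(out)
    writer.writerow(["city_a", "city_b", "distance_km"])  # hypothetical column names
    count = 0
    # combinations() yields each unordered pair exactly once: n * (n - 1) / 2 pairs.
    for (name_a, lat_a, lon_a), (name_b, lat_b, lon_b) in itertools.combinations(cities, 2):
        writer.writerow([name_a, name_b, round(distance_fn(lat_a, lon_a, lat_b, lon_b), 1)])
        count += 1
    return count


# Usage with toy coordinates and a stand-in distance (Manhattan distance in degrees).
buffer = io.StringIO()
toy_cities = [("A", 0.0, 0.0), ("B", 0.0, 1.0), ("C", 1.0, 0.0)]
n_pairs = write_city_pairs(
    toy_cities,
    lambda lat_a, lon_a, lat_b, lon_b: abs(lat_a - lat_b) + abs(lon_a - lon_b),
    buffer,
)
```

Because the pair count grows quadratically with the number of cities, this loop is the natural place to parallelize if generation is slow.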

How to Use

- Install the required dependencies by running `pip install -r requirements.txt`.

- Run `make city_distances.csv` to generate the dataset of city distances.

- Run `make train` to train the neural network model.

- Run `make eval` to evaluate the trained model and generate evaluation plots.

What to Expect

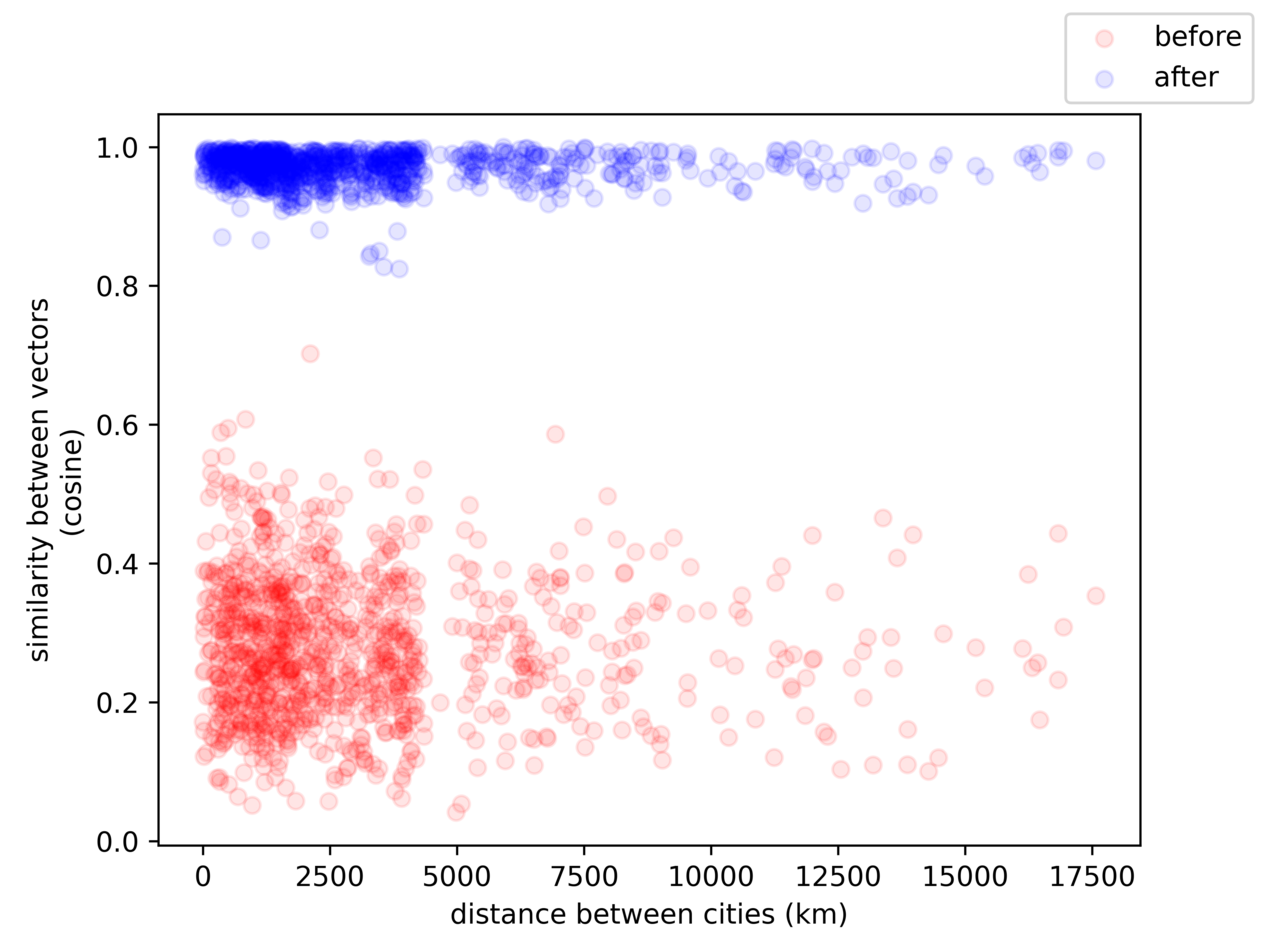

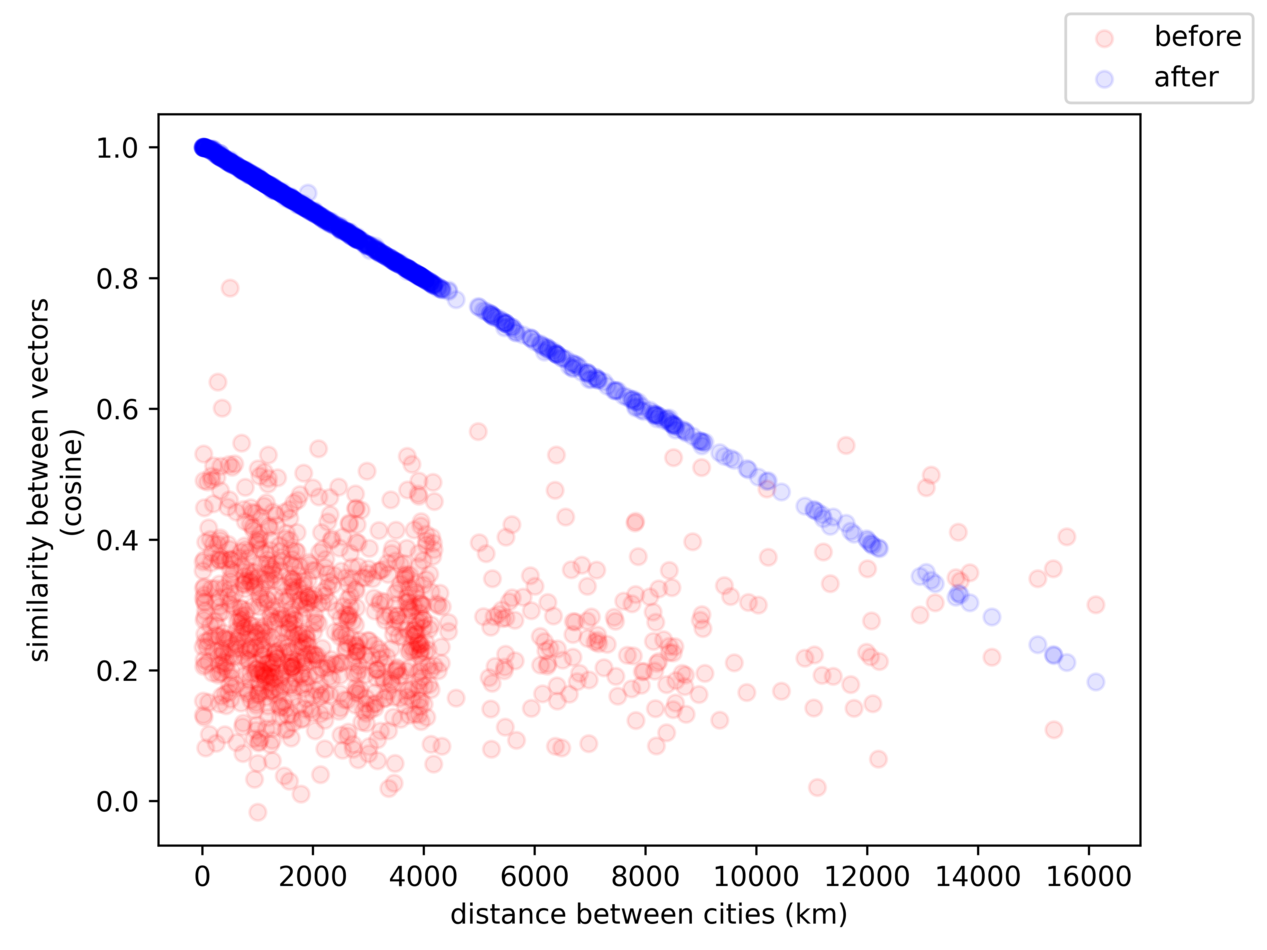

After training, the similarity between two cities' vectors should reflect their geodesic distance.

You can inspect the evaluation plots generated by the eval.py script to see how the similarity scores improve after training.

The script randomly samples 10,000 rows from the training dataset and plots the distances before and after training.

The plots above are placeholders for the actual evaluation plots showing the similarity between city vectors before and after training.
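The before/after comparison can be sketched as sampling rows with a fixed seed and computing the cosine similarity of each city pair's vectors. This is an illustration under assumptions, not eval.py itself: the `(city_a, city_b, distance_km)` row shape and the `embed` callable (standing in for whatever turns a city name into a vector) are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Row = Tuple[str, str, float]  # (city_a, city_b, distance_km); hypothetical row shape


def cosine_similarity(u: Vector, v: Vector) -> float:
    """Cosine of the angle between u and v (0.0 if either vector is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_vs_distance(
    rows: Sequence[Row],
    embed: Callable[[str], Vector],
    k: int = 10_000,
    seed: int = 0,
) -> List[Tuple[float, float]]:
    """Return (distance_km, cosine similarity) for a random sample of up to k rows."""
    rng = random.Random(seed)  # same seed -> same rows for the before/after comparison
    sample = rng.sample(list(rows), min(k, len(rows)))
    return [(dist, cosine_similarity(embed(a), embed(b))) for a, b, dist in sample]
```

Calling `similarity_vs_distance` once with the base model's encoder and once with the fine-tuned encoder, using the same seed, yields two point sets over identical city pairs that can be scatter-plotted against distance.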

Future Extensions

There are several potential improvements and extensions to the current model:

- Incorporate airport codes: Train the model to understand the unique codes of airports, which could be useful for search engines and other applications.

- Add city aliases: Enhance the dataset with city aliases, so the model can recognize different names for the same city. The `geonamescache` package already includes these.

- Include time zones: Train the model to understand time zone differences between cities, which could be helpful for various time-sensitive use cases. The `geonamescache` package already includes this data, but how to calculate the hours between them is an open question.

- Expand to other distance metrics: Adapt the model to consider other measures of distance, such as transportation infrastructure or travel time.

- Train on sentences: Improve the model's performance on sentences by adding training and validation examples that involve city names in the context of sentences.

- Global city support: Extend the model to support cities outside the US and cover a broader range of geographic locations.
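For the open question of calculating the hours between time zones, one option is to compare the two zones' UTC offsets at a specific instant, since daylight saving time makes the difference date-dependent. A minimal sketch, assuming each city's time zone can be turned into a Python `tzinfo` (for example an IANA name such as `America/New_York` passed to `zoneinfo.ZoneInfo`); the function name `hours_between` is hypothetical.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def hours_between(tz_a: tzinfo, tz_b: tzinfo, at: datetime) -> float:
    """Hours by which tz_b is ahead of tz_a at the instant `at` (negative if behind).

    The result depends on `at` because daylight saving time shifts UTC offsets.
    """
    if at.tzinfo is None:
        raise ValueError("`at` must be timezone-aware")
    offset_a = at.astimezone(tz_a).utcoffset()
    offset_b = at.astimezone(tz_b).utcoffset()
    return (offset_b - offset_a) / timedelta(hours=1)


# Fixed offsets for illustration; real data would use zoneinfo.ZoneInfo("America/New_York") etc.
noon_utc = datetime(2023, 1, 15, 12, tzinfo=timezone.utc)
print(hours_between(timezone(timedelta(hours=-7)), timezone(timedelta(hours=-5)), noon_utc))  # → 2.0
```

With `ZoneInfo("America/Phoenix")` and `ZoneInfo("America/New_York")` (which requires the IANA time zone database, available on most systems or via the `tzdata` package), the result is 2.0 for a mid-January instant and 3.0 for a mid-July one, because Arizona does not observe daylight saving time. A model trained on such targets would therefore need a fixed reference date or a per-season label.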

Notes

- Generating the data took about 13 minutes (for 3,269 US cities) on 8 cores (Intel Core i7-9700K), yielding 2,720,278 records (combinations of cities).

- Training on an NVIDIA RTX 3090 Founders Edition takes about an hour per epoch with an 80/20 train/test split. With a batch size of 16, this gives 136,014 steps per epoch.

- TODO: Add training/validation examples that use city names in the context of sentences. It is unclear how the model performs on sentences, since it was trained only on word pairs.
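The step count above follows from the record count, the 80% training share, and the batch size. A quick check, assuming the final partial batch is kept (hence the ceiling); `steps_per_epoch` is an illustrative helper, not part of train.py.

```python
import math


def steps_per_epoch(n_records: int, train_fraction: float, batch_size: int) -> int:
    """Optimizer steps per epoch when the last partial batch still counts as a step."""
    n_train = int(n_records * train_fraction)  # rows that land in the training split
    return math.ceil(n_train / batch_size)


print(steps_per_epoch(2_720_278, 0.8, 16))  # → 136014
```

Here 2,176,222 training rows divided by 16 is 136,013.875, which rounds up to 136,014. At about an hour per epoch, that works out to roughly 26 ms per step (3,600 s / 136,014).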